High-performance Computing for Visual Simulations and Rendering

- DOI

- 10.2991/jrnal.k.190828.006

- Keywords

- Render farm; visual simulation; virtual desktop infrastructure; high-performance computing

- Abstract

The National Center for High-performance Computing (NCHC) built a render farm that gives the industry a platform for rendering its work far more quickly, with support for both central processing unit (CPU) and graphics processing unit (GPU) rendering. Throughput has also been greatly improved to support complex, large-scale simulations. The main goal of this session is to introduce the improvements and new applications of NCHC’s render farm.

- Copyright

- © 2019 The Authors. Published by Atlantis Press SARL.

- Open Access

- This is an open access article distributed under the CC BY-NC 4.0 license (http://creativecommons.org/licenses/by-nc/4.0/).

1. INTRODUCTION

Most people have seen an animated film at least once in their lifetime, but the number of work hours put into each film is often underestimated. A high-quality animation such as a Pixar film can take anywhere from 4 to 7 years from start to finish [1].

Computer graphics must be rendered before they can be viewed as high-resolution images. However, rendering takes a lot of computing power, especially for animated films, whose content relies entirely on computer graphics. Animation studios and related industries therefore need a high-performance computing system to avoid spending excessive time on rendering.

The National Center for High-performance Computing (NCHC) [2] at the National Applied Research Laboratories of Taiwan built a render farm, a cluster of networked computers that includes 70 machines and 32 cores, with the goal of strengthening the film industry in Taiwan. The render farm can be accessed directly through Virtual Desktop Infrastructure (VDI), which lets users take advantage of the platform from any device at any location. Because VDI gives access to a powerful computer, users can also use the virtual desktop to create visual simulations. This was intended for students who may not have access to a well-equipped device when they need one. It also means that users can run jobs on the render farm while continuing to work on their own device without interference, which lets companies work more efficiently.

One of the long-term goals at NCHC is to bring forward the cultural side of Taiwan through our developing technology by working collaboratively with the creative industry. So far, over 100 films in Taiwan have been rendered using this platform.

2. BACKGROUND

All computer graphics must go through the process of rendering. In the film industry, rendering can be understood as how a computer draws an image by calculating the color of each pixel. The filmmaker Sudhakaran [3] illustrates it this way: if an image contains a red pixel and a filter is added to make that pixel blue, the software has to recreate the blue pixel when it exports the finished image.
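The red-to-blue example can be sketched in a few lines of code. This is only an illustration of per-pixel computation: the 2 × 2 image, the `red_to_blue` filter, and the `render` function are hypothetical names chosen here, not part of any renderer discussed in this paper.

```python
# A tiny 2x2 "image": each pixel is an (R, G, B) tuple with values 0-255.
RED = (255, 0, 0)
BLUE = (0, 0, 255)
WHITE = (255, 255, 255)

image = [
    [RED, WHITE],
    [WHITE, RED],
]


def red_to_blue(pixel):
    """Hypothetical filter: turn pure red pixels blue, leave others unchanged."""
    return BLUE if pixel == RED else pixel


def render(source, pixel_filter):
    """Recompute every pixel of the output image by applying the filter."""
    return [[pixel_filter(pixel) for pixel in row] for row in source]


rendered = render(image, red_to_blue)
print(rendered)
```

Even in this toy case the software visits every pixel once per filter, which is why render cost grows with both resolution and the number of effects applied.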

In three-dimensional (3D) animation and the Computer Generated Imagery (CGI) found in films, the 3D content modeled in the computer needs to be rendered to high-resolution images before it can be composited. Rendering a video is the same as rendering a sequence of images. If an object moves slightly to the right in each successive frame, the object appears to move to the right when the frames are played one after another, like a flip book. Animated films are normally set to 24 frames per second. The more information an image has, in terms of shape, texture, lighting, etc., the more time it takes to render.

As CGI technology advances, filmmakers often choose to use virtual props in their scenes because, in most cases, this is easier and cheaper than building them in real life. These visual effects can be so realistic that the audience is convinced they are actual footage filmed with real-life setups. Whether they are photorealistic renders of CGI in live-action films or non-photorealistic renders in animated films, both use simulations that emulate the dynamic behavior of natural systems in physics, chemistry, etc., to make the animation more believable to the audience.

Visual simulations are computer graphics that change their form or condition to show the results of calculations done by computers, in which variables are produced from the given inputs and algorithms [4]. The algorithms can determine the particles used in special effects, elements of physics, or any variable that can be calculated. This is useful for creating graphics that emulate the real world, such as previsualizing architecture under various circumstances before construction, or staging movie scenes that cannot be done physically. It can also be used in 3D animation. Making visual simulations requires a computer powerful enough to run image editing or 3D modeling software.

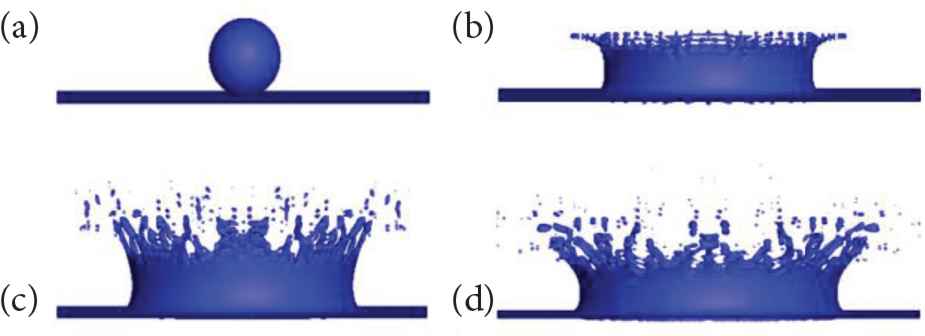

Mathematical algorithms let a computer simulate a mathematical model that calculates the behavior of dynamic phenomena such as fur, fire, or smoke. An example from NCHC, presented at SIGGRAPH Asia 2014, shows how a computer can simulate a water crown splash, as illustrated in Figure 2. Instead of animating the fluid frame by frame, a simulation can be generated from information such as the size or the speed of the water drop. Once all the information is gathered, the computer can imitate the reaction of a drop of fluid hitting a surface from some distance above. Figure 2 shows the result of a simulation over time when the researcher used a mesh size of 400 × 400 × 200 in the phase-field and momentum equations [5].

Figure 1. Cloud Render Farm at NCHC (National Center for High-performance Computing 2016) [2].

Figure 2. Milkcrown simulation using OpenACC accelerated framework (P-H. Chiu 2011) [5].
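The idea of generating motion from a few inputs, rather than animating it frame by frame, can be shown with a much simpler model than the one used for the milkcrown. The sketch below is not the phase-field method of [5]; it is a minimal fixed-step time integration of a single drop falling under gravity, assuming no air resistance. The function name, the 0.5 m drop height, and the 1 ms time step are illustrative assumptions.

```python
G = 9.81  # gravitational acceleration in m/s^2


def simulate_drop(height_m, initial_speed=0.0, dt=0.001):
    """Step a falling drop forward in time until it reaches the surface.

    Returns (time of impact in s, downward speed at impact in m/s).
    Simplified stand-in for a fluid solver: one point mass, no drag.
    """
    height, speed, elapsed = height_m, initial_speed, 0.0
    while height > 0.0:
        speed += G * dt        # gravity accelerates the drop
        height -= speed * dt   # the drop moves down by speed * dt
        elapsed += dt
    return elapsed, speed


t_hit, v_hit = simulate_drop(height_m=0.5)
print(f"impact after {t_hit:.3f} s at {v_hit:.2f} m/s")
```

A full fluid simulation repeats this kind of update for every cell of the mesh at every time step, which is what makes a 400 × 400 × 200 mesh (32 million cells) so demanding.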

3. CONCERNED CONTENTS

With all the information a computer must process when rendering, the time and computing power needed to complete even a single frame can be extensive. To put it in perspective, a Pixar film, using as many as 2000 computers, can take up to 29 h to render a single frame [6]. Considering that there are 24 frames in 1 s, 60 s in 1 min, and about 100 min in a film, a render farm becomes essential for rendering a 3D animated film. This is also why planning a scene carefully before sending it to render is so important: too much time would be wasted if a shot did not succeed on the first take.

Big animation studios such as Pixar do not have a problem with rendering resources, because they can purchase a computer cluster of their own. Students and smaller studios, however, do not have the same access to these resources. Many students cannot even get a single computer powerful enough to run the software for creating 3D content. One issue in Taiwan is that many talented students do not have the tools at hand for their creativity. To help these students, as well as the smaller studios in Taiwan, NCHC has built a high-performance computing platform and provides a render farm service for them to use.
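The scale implied by these figures can be worked out directly. The calculation below is a rough upper bound under two assumptions that are not stated in the source: every frame takes the worst-case 29 h on one machine, and frames render independently, so 2000 machines can work on 2000 frames at once.

```python
FRAMES_PER_SECOND = 24
SECONDS_PER_MINUTE = 60
FILM_MINUTES = 100      # "about 100 min in a film"
HOURS_PER_FRAME = 29    # worst case quoted in the text
MACHINES = 2000         # cluster size quoted in the text

total_frames = FRAMES_PER_SECOND * SECONDS_PER_MINUTE * FILM_MINUTES
machine_hours = total_frames * HOURS_PER_FRAME   # work for a single machine
wall_clock_hours = machine_hours / MACHINES      # assumes perfect parallelism
wall_clock_days = wall_clock_hours / 24

print(total_frames)      # 144000
print(machine_hours)     # 4176000
print(wall_clock_days)   # 87.0
```

Under these assumptions a single machine would need roughly 477 years, while 2000 machines would finish in about 87 days, and that is before any shot has to be rendered a second time.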

4. METHODOLOGIES

The 3D production pipeline is split into three main stages: pre-production, production, and post-production. Pre-production is where the story is formed; it includes coming up with an idea, laying out the story in storyboards, and creating concept designs. Production is where High-Performance Computing (HPC) becomes helpful; it includes 3D modeling, texturing, rigging/setup, animating, Visual Effects (VFX), lighting, and rendering. Finally, post-production takes place once all the rendered animations are put together for compositing, and the result needs to be rendered again after 2D VFX/motion graphics and color correction are added [7].

NCHC uses VDI to give users access to a more powerful computer with the appropriate software. This helps users who lack the hardware or software resources for creating 3D content. VMware Horizon was used to create an environment where the Graphics Processing Unit (GPU) render farm at NCHC can be accessed virtually, like a cloud studio. It comprises components such as the vSphere ESXi hypervisor, vCenter server, View Connection server, and View Composer service. To access the virtual desktop via VMware Horizon Client, users simply enter the assigned IP address with a username and a password. H.264 encoding, accelerated by the GPU of the render farm, gives users who are restricted by a limited network a smooth experience with the virtual desktop [8].

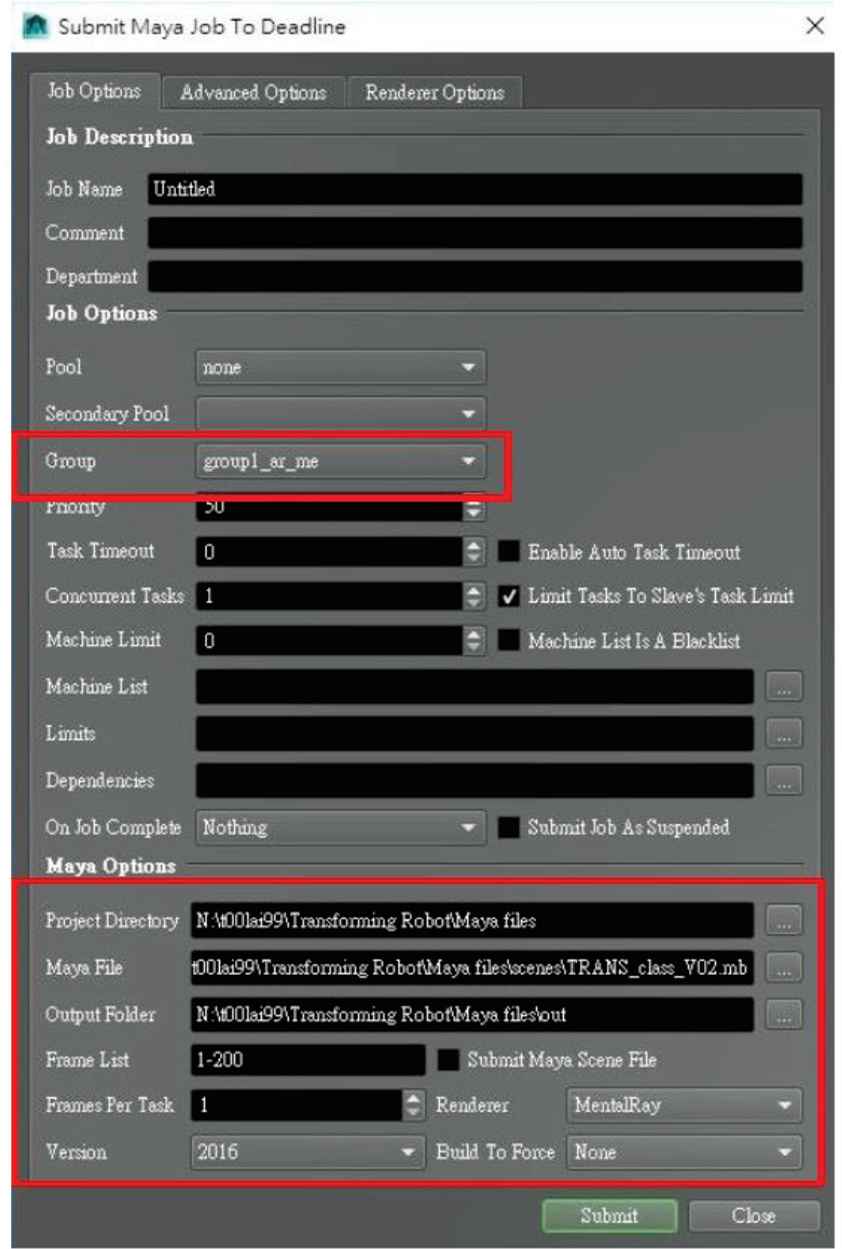

Authorized software and programs are also available on the virtual desktop, known as the cloud studio. These include animation software such as Maya and 3ds Max; ray-tracing renderers such as Arnold and V-Ray; and visual effects simulators such as RealFlow. The HPC system at NCHC includes Deadline, a compute management toolkit that helps organize rendering tasks sent to the GPU render farm. NCHC’s render farm gives users easy access through the cloud studio, which lets the production team check progress as each scene renders and find mistakes at earlier stages of the render process. To render an image using NCHC’s render farm, the user needs to do the following: submit the project file to Deadline, set the desired renderer by selecting groups, set the project directory, choose the project file, enter the range of frames to be rendered, and link the output to a file location. This is shown in Figure 4.

Figure 3. Areas where the NCHC render farm/VDI can help in the 3D animation pipeline (illustrated by author).

Figure 4. Screenshot showing the custom user interface in Maya for submitting a Maya job to Deadline.
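The submission steps listed above amount to filling in a small record and handing it to the render manager. The sketch below is a hypothetical data structure, not Deadline's API or file format: the `RenderJob` class, its field names, and the example paths are assumptions made for illustration. It checks that each field from the steps is present and shows how a frame range might be split into chunks that separate machines could render in parallel.

```python
from dataclasses import dataclass


@dataclass
class RenderJob:
    """Hypothetical record of the fields the submission steps ask for."""
    renderer_group: str      # group of machines that runs the chosen renderer
    project_directory: str
    project_file: str
    first_frame: int
    last_frame: int
    output_directory: str

    def validate(self):
        """Raise ValueError if a field is empty or the frame range is invalid."""
        for name in ("renderer_group", "project_directory",
                     "project_file", "output_directory"):
            if not getattr(self, name):
                raise ValueError(f"{name} must not be empty")
        if self.first_frame > self.last_frame:
            raise ValueError("first_frame must not exceed last_frame")

    def split_into_tasks(self, frames_per_task):
        """Split the frame range into inclusive (start, end) chunks."""
        if frames_per_task < 1:
            raise ValueError("frames_per_task must be at least 1")
        tasks = []
        start = self.first_frame
        while start <= self.last_frame:
            end = min(start + frames_per_task - 1, self.last_frame)
            tasks.append((start, end))
            start = end + 1
        return tasks


job = RenderJob(
    renderer_group="arnold",                   # placeholder group name
    project_directory="/projects/demo",        # placeholder path
    project_file="shot_010.mb",                # placeholder Maya scene file
    first_frame=1,
    last_frame=48,
    output_directory="/projects/demo/images",  # placeholder path
)
job.validate()
print(job.split_into_tasks(frames_per_task=20))  # [(1, 20), (21, 40), (41, 48)]
```

Validating the record before submission reflects the point made earlier about planning: catching an empty output path or a reversed frame range costs nothing, whereas discovering it after hours of rendering does.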

A remote virtual desktop with resources for a complete production pipeline and a batch render cluster avoids the need to transfer large files, which is often a problem with traditional render farms. The platform was designed so that users can work on their projects in the cloud studio from start to finish; users who wish to finish a work in progress can also upload files from a personal device through file transfer applications such as FileZilla. The cloud studio also makes it easier to cooperate on a project, with the storage system's high throughput and Input/Output Operations per Second (IOPS) performance supporting multiple users.

Moreover, the NCHC render farm has been expanded since 2018. The new render farm is 88 times as efficient as the original, and its throughput was tested to reach 2.175 GB/s, a tenfold improvement over the old render farm. Three more layers of security were added to further protect users' property: two-factor authentication, a file protection system, and lateral movement protection.
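To give a feel for what the throughput figure means in practice, the sketch below estimates ideal transfer times. It assumes that the tenfold improvement refers to throughput, so the old system is taken as 0.2175 GB/s; the 100 GB project size is a hypothetical value, and protocol and storage overheads are ignored.

```python
NEW_THROUGHPUT_GB_S = 2.175                     # tested value quoted in the text
OLD_THROUGHPUT_GB_S = NEW_THROUGHPUT_GB_S / 10  # assumption: tenfold applies to throughput


def transfer_seconds(size_gb, throughput_gb_s):
    """Ideal time to move size_gb at a sustained throughput, ignoring overhead."""
    return size_gb / throughput_gb_s


project_size_gb = 100  # hypothetical project size
new_s = transfer_seconds(project_size_gb, NEW_THROUGHPUT_GB_S)
old_s = transfer_seconds(project_size_gb, OLD_THROUGHPUT_GB_S)
print(f"new: {new_s:.1f} s, old: {old_s:.1f} s")
```

With these numbers, reading a 100 GB project takes about 46 s on the new system compared with several minutes before, a difference that matters when many users share the same storage.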

5. POSSIBLE OUTCOME

The GPU render farm at NCHC renders about 10 times faster than a CPU render farm. Moreover, it supports real-time rendering. An example of real-time rendering using NCHC’s GPU render farm is a dance with interactive holographic projections, performed at the experimental performance lab at NCHC headquarters. Using the Kinect, a motion-tracking camera, a dancer created an interactive performance with both the camera and a transparent projection screen in front of him (Figure 5).

Figure 5. Dance with interactive holographic projection performed at NCHC’s experimental performance lab (C. Lien 2016).

The ability to perform real-time rendering opens the possibility of bringing Virtual Reality (VR) and Augmented Reality (AR) to HPC. VR and AR technology has been improving over the years, but it has not yet become popular. Once VR and AR headsets are connected to HPC through VDI, they will no longer be limited by the power of a personal device, only by the power of HPC. Tests have shown that any device supported by VMware Horizon can log into NCHC’s cloud studio.

NCHC plans to acquire more advanced software and plugins for the render farm. For example, a grooming plugin lets the user create more realistic fur and simulate the way it moves or reacts to objects. With new tools at hand, users can create more advanced visuals for their content. The time the render farm saves in rendering provides the opportunity to create a better product in the same amount of time.
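Real-time rendering means that each frame must be finished within a fixed time budget set by the display rate. The sketch below computes that budget and checks whether a given render time fits. The frame rates of 60 and 90 frames per second are assumed here as typical targets for interactive displays and VR headsets, and the 200 ms CPU render time is a hypothetical value; only the factor of 10 between CPU and GPU comes from the text.

```python
def frame_budget_ms(frames_per_second):
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / frames_per_second


def is_real_time(render_ms, frames_per_second):
    """True if a frame that takes render_ms fits inside the frame budget."""
    return render_ms <= frame_budget_ms(frames_per_second)


for fps in (24, 60, 90):
    print(f"{fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")

cpu_frame_ms = 200.0              # hypothetical CPU render time for one frame
gpu_frame_ms = cpu_frame_ms / 10  # "about 10 times faster" on the GPU farm
print(is_real_time(cpu_frame_ms, 24), is_real_time(gpu_frame_ms, 24))
```

In this illustration the tenfold speedup is what moves the frame from outside the 24 frames per second budget to inside it; higher display rates shrink the budget further and leave less room for network latency over VDI.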

6. CONCLUSION

Simulations are used not only in animations and films but also for research and experimentation in many other industries. However, simulations are only numbers in the computer until they are rendered into visual content. Whether or not the content is rendered in real time, rendering is a compute-intensive job for which a high-performance computing system is helpful. Shortening the time needed for rendering improves workflow efficiency, and interactive experiences will only improve in graphics quality and latency with the help of HPC.

CONFLICTS OF INTEREST

The authors declare they have no conflicts of interest.

Authors Introduction

Ms. Jasmine Wu

She received her BFA from Syracuse University in 2017. She is a Research Assistant at National Center for High-performance Computing of National Applied Research Laboratories.


Dr. Chia-Chen Kuo

She received her PhD in Environmental Engineering from National Chiao Tung University (NCTU) in 2011. She is a Research Fellow at National Center for High-performance Computing of National Applied Research Laboratories. She is a Jointly Appointed Professor at National Chiao Tung University for Department of Computer Science.


REFERENCES

Cite this article

TY - JOUR
AU - Jasmine Wu
AU - Chia-Chen Kuo
PY - 2019
DA - 2019/09/10
TI - High-performance Computing for Visual Simulations and Rendering
JO - Journal of Robotics, Networking and Artificial Life
SP - 101
EP - 104
VL - 6
IS - 2
SN - 2352-6386
UR - https://doi.org/10.2991/jrnal.k.190828.006
DO - 10.2991/jrnal.k.190828.006
ID - Wu2019
ER -